A memorable informal meeting from April 2007. Time to blog it. Transferred from email to Word to blog, this is McDawg’s longest post to date.

After downloading numerous maps showing how to get from a railway station I had never been to and walk about 1.5 miles to a Travelodge I had never been to either, I thought I was well equipped for this meeting.

After getting off the train in no man’s land, I had only one fellow passenger who got off with me to point me in the right direction. Getting from A to B on foot was obviously possible (they are both on land as far as I am aware) but would involve traversing motorways and the like. He pointed to a phone box and suggested I call a cab. Excellent idea, but we Scots are notoriously tight-fisted. With no taxi numbers on me and none in the phone box (it’s rare to even find a phone box these days): hmm.

After a few minutes, my taxi arrived from nowhere. £4 later, I had been transported from A to B.

Not anticipating this 30-minute time warp into the future, I scoured the Travelodge for Dr Lon Jones and his wife, but the results came back negative. I went out for a smoke. Three puffs in, a gentleman came round the corner. When I spoke with Lon on Tuesday, I had told him that I had seen his picture on the web and would therefore be able to recognise him. From a distance, this gentleman certainly looked like him. As he came closer... yep, that’s him. “Hi Lon,” I said as he sailed by me. “Graham?” he said. “You’re early,” he said, looking at his timepiece. I explained (pointing at my map at the time) how I had just had a time-warp experience in a mystery vehicle called a taxi (a little-used but handy vehicle, resorted to sparingly by Scotsmen unless blind drunk with no other way to get from B to A).

He left me to finish my cigarette, clearly unaware that, at a leisurely pace, such an experience can take a few minutes. As I stubbed out the offending substance, he reappeared at the door just as I entered the Travelodge in no man’s land.

He was quizzical about precisely where I had seen his photo. (Ed: from the Xlear website!!)

Dr Lon Jones and Jerry Bozeman

I said that I could not recall where I had seen it. Clearly, he and Mrs Jones had had a conversation vis-à-vis this issue whilst I had been puffing away outside. He said, “You probably wouldn’t have recognised my wife then,” as I shook her hand, “as she’s grown her hair.” Indeed, this was a factually correct statement.

Chapter 1

We moved to a quiet table away from the bustle of the current residents and visitors of the Red Deer Village, Innkeeper’s Lodge, Cumbernauld, Glasgow. Situated (Ed: on the A80, actually) just 200m from the busiest motorway in Scotland, the place had no deer in sight, though any that roamed around such a highway would certainly have ended up coated in red.

Mrs Jones was supping the last drops of a pint of fine ale. After some brief opening pleasantries, Lon asked how I had become involved in CJD. I said that I thought he might ask that and had flung together some documentation the previous night that might be useful during our discussion. First, though, I responded with “What sort of music are you guys into?” and proceeded to rummage through my rucksack for a CD that I thought would be a good closer but turned out to be a great ice-breaker: a copy of “Steck – The Best Bitz” (a 20-track compilation of work I recorded from 1985 to 2000). This took them both completely by surprise. Mrs Jones, now known as Jerry, mentioned that their hire car has a CD player but they have no CDs. So, whilst Lon’s musical taste in particular is not in line with the content of the CD, at the very least it will get listened to. They’re off to Iona the day after this discussion and, after that, to Eire and Northern Ireland.

Chapter 2

In contrast to previous face-to-face discussions with newish contacts, I surprisingly took few notes: less than a page over three hours of intense conversation. Jerry, I sensed, also held a PhD. Indeed, she is a registered and practising psychotherapist. After revealing the nature of my day job, I jokingly suggested that maybe I could refer some of my clients to her. This was taken partly seriously until they both realised the irony of my remark. Both are clear thinkers.

Down to business, and more rummaging through the aforementioned rucksack, or “rucky” as it is referred to in this locale.

Now back home, the content of my rucky is much lighter and out of sync (content wise) than when it left these four walls this morning. I would add that I am pleased to still have four walls and a floor after my downstairs neighbour removed a large wall yesterday. Stewards’ enquiry is currently at stage two. Not as bad as initially suspected.

Dipping into sections 3 & 4 of rucky yielded the best documentation. In the meantime, “Pentosan Polysulphate” by Linda Curreri was eloquently passed over to Lon. This was my own personal copy, as I was unable to locate the second copy Linda had sent me, which was originally destined for Ian Anderson of Jethro Tull, who gets a mention in the foreword.

The “Timeline” of events document, covering 2000 to the present day, was a great guide at various points during the discussion. To answer Lon’s opening gambit, I briefly mentioned my brother and explained that, after a two-year time lag, my band split up and it was maybe time (after 20 years in the semi-pro and amateur business) to try something else. I explained how, a week after the band broke up, I had been called and asked to consider becoming Vice-Chair of a CJD Support Group (which later became a charity). I went on to explain that at the tender age of 32, it seemed an interesting but challenging role to consider, let alone accept. How right I was!!

Chapter 3

Lon read Linda’s book intently and recalled that he had first heard from her (from memory) around 1999 to 2000. I remarked that I had not connected with Linda until 2003 but had remained in very regular contact with her right up to the present day. It became Xlear, sorry, clear that Lon had missed an opportunity to drop by and meet Linda face to face when he and Jerry were in New Zealand two years ago. They do, however, plan to return and will most certainly wish to meet this courageous and intensely interesting individual next time around. Not that it seemed to be required, but the writer encouraged such an operchancity.

With Lon still engrossed in Linda’s book, I spoke at some length with Jerry about music, and church organs no less. Personally, I had only played one about twice, back in the Eighties, when our Rev’d at the time was not in the building.

After a brief (but it ties in) relapse into the beginnings of my musical training at the piano and, more importantly, keyboards etc., we went back to business and Jerry picked up her copy of today’s “The Independent”, courtesy of the Travelodge, again. Later on, I myself picked up a free copy of this 70p ‘newspaper’ and found little news but pages upon pages of advertisements. (These days, if I want news, I usually use the Internet.)

Chapter 4

My guests are both vegetarians; I am not (omnivore). Having studied the menu, I could see it was a vegetarian’s nightmare. Being polite (the cheeseburger option was most tempting), I went with the flow, and three carrot, coriander and leek soups with crusty bread were swiftly delivered and quickly consumed. A second round could easily have been eaten up.

Unexpectedly, our three-hour discussion turned out to be led mainly by me. Lon was mainly interested in anatomy-related matters, but also very interested in issues such as preventative measures and how to rebalance internal human environments when faced with rogue bacteria and proteins.

I mentioned my contact with Dr Ellie Philips and how she had kindly sent me a shipload of Xylitol products, and I brought out some mints and gums. Lon quickly produced his own supply of Xylitol gum and an all-round teeth-cleansing moment took place. After all, in dental terms, this was one of the direct points of having this discussion. A practise-what-we-“preach” type of thing, I suppose.

Lon knew a bit about ‘prion disease’ but not much about the anatomy. The best way to respond was to describe the apparent misfolding of the normal protein PrPc (about which something, but not much, is known) into the “misfolded” version, until recently described as PrPsc. With us now knee-deep in scientific matters, Jerry walloped down a rather tasty-looking Irish coffee and went upstairs for a well-earned rest, judging from what I could gather.

—

With Lon now interested in “prions” and the Nobel Prize winner who coined a name for something that does not appear (with certainty) to actually exist, he became more than interested in what we were trying to do to stop the relentless progression of the disease (PrPd) in humans, specifically in Pentosan terms.

At this point, it was reasonable to dig into my rucky again and pull out further documentation. In the end, all “cards” were on the table (literally) and I told him to take away whatever he wanted. He did, and thanked me for being so open.

Chapter 5 – The Book

My Xylitol contact list. This was, and still is, a working document, i.e., it is incomplete and subject to change. It was of immense interest to Lon. Lon (as I knew already) has a patent on his Xlear Nasal Spray. Osmotically speaking, this is where everything made total scientific sense. Fighting bacteria is what mainly drives Lon. Looking back at part of what he wrote to me in February, this now makes better sense.

“I am working on a book that deals with shifting our paradigm from the mechanical model to a complex one that honours the adaptations we and our evolutionary ancestors have made that helps us to deal more effectively with hostile agents in our environments. The billion dollars made by blocking such adaptations (fever, diarrhoea, rhinorrhea, to name just a few) is a large force that will not take this kindly”.

Around this time, Lon expanded upon his current book. It is largely complete, pending a publisher sufficiently interested in making it widely available.

Currently dubbed “K C”, this book is about the Complex Adaptive System (previously dubbed CAS). When rogue bacteria enter their new “host”, they are extremely good at self-replication, in some cases millions of times over. Lon’s work deals with bringing internal environments back to their natural state and thus taming unwanted particles in the host. The rampant smart particles never anticipate such a fight-back and eventually die off. The system can therefore be restored to normality not by fighting the bacteria, but by reinforcing the natural environment.

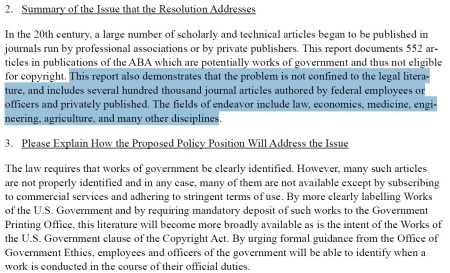

Does this work? It might. Has this been tried? Yes. See here. Specifically, in relation to ear infection, a successful clinical trial (n of about 160, against a control group of similar size) took place around 2005 but was not accepted for publication. Since I am now aware that unpublished material can be less, equally or more interesting than published material, I look forward to viewing this section of the author’s currently unpublished material. On the face of it, Xylitol, already very well documented as fighting off the bacteria that cause dental decay, is apparently also effective when delivered intranasally.

Knowing that Lon has patented this, and given the commercial world we live in, what he told me next was not surprising. He mentioned a team in the US that had cottoned on to “CAS” and intranasal Xylitol and carried out a clinical trial. Kind of handy for Lon when he found out and told them that he held the patent.

Chapter 6

With the contents of my rucky now pretty much everywhere, but some still not on the table, Lon looked at what was left in rucky. I told him to look through it: no problem.

Around this time, we spoke about a number of issues, but preventative measures came up several times. He mentioned a book that he and Jerry had just finished reading by Dr Colin Campbell, entitled “The China Study”. This I must check out.

My new friends seemed quite taken by their copy of the handout “CJD Alliance Glasgow 2006”. In particular, Lon was extremely interested in connecting with the Chair of that discussion, Dr Mark McClean. Moreover, Lon was, I sense, quite taken by Dr McClean’s summary from the Minutes of the discussion:

“What have we achieved this afternoon?”

1. Three Medical Presentations. One on diagnostics, one on therapeutics and one on the problems inherent in tackling the different TSE strains. We asked today’s most important question – “what about systemic PrPd?”

2. The Legal Presentation – a vital branch in the past and present multidisciplinary approach to CJD/TSE. Mr Body’s success reflected his specialisation in the field – something our National Health Service should mirror.

3. Inevitable improvement of future research by our interested parties as they take away new knowledge acquired at this afternoon’s Discussion.

4. Further essential ‘networking’ between various interested parties – not to be underestimated.

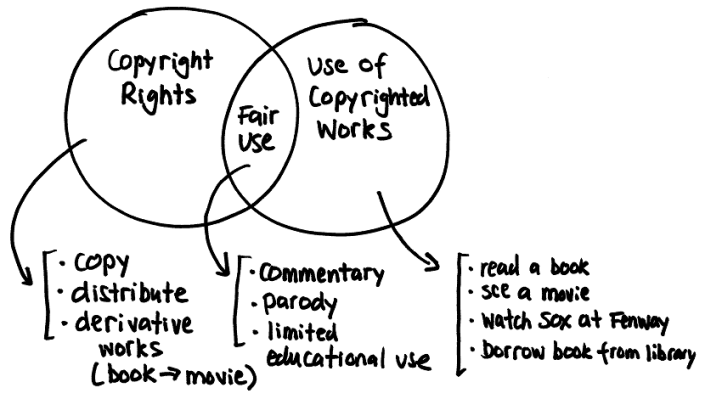

Before we closed this discussion, a number of points came to mind. Open Access in medical research had been touched upon, but I wanted to raise it again. Lon mentioned that he had received three requests for his first published paper and zero for his second. He warmed superbly to the philosophy of Open Access via the Internet and asked how long I had been using the web. Six years. “Do you use Google to search for medical research?” Lon enquired. No; at the moment I use PubMed, and now also Open Access outlets. I stressed the point that it is impossible to fully evaluate a paper from its abstract alone. When I mentioned my recent conversation and contact with Peter Suber, Lon warmed vigorously to the OA philosophy and fully intends to contact him directly. Excellent news.

Having shared a copy of my Xylitol contact list with Lon, I sensed he was a bit taken aback by it. Whilst he has a hard copy, I stressed that this was an e-document containing many, many active links. A copy has now been emailed to him.

Lon mentioned his son Nathan and told me a bit about him. I asked if he had any other children. Thirteen. I thought he was being whimsical. No, he has fourteen kids with his first wife. Wow. Over a period of twenty-odd years, he was certainly active. This man certainly has the largest family I am personally aware of.

Chapter 7

As we closed up, I took stock. I had, and indeed have, a number of ‘action points’ to follow up. These were unexpected but very welcome. Despite doing most of the talking, I had spent the afternoon with an extremely intelligent but somewhat reclusive, mild-mannered man. Whilst we may not meet again, we will certainly keep in touch, no question.

Would I need to conjure up another mystery taxi, I wondered? Thankfully not, as Lon kindly offered to drive me back to the railway station in no man’s land. As it happened, my main map came in rather handy after all, and our direct route turned out to be about half the length of the one taken by the mystery taxi driver!! This appears to be a global phenomenon. Hence the aforementioned comment about why Scotsmen use such means of transport only sparingly. I rest my case.

As we arrived at my station, my twice-an-hour (I had no idea when) mystery train was there and just about to leave. A hasty goodbye, and off I ran and JUST managed to board.

Goodbye Lon & Jerry

THE END
